Chinese lab targets one of AI’s biggest bottlenecks with bidirectional parallelism and dynamic load balancing.

Training large AI models has always been a high-stakes game of computational Tetris—fitting layer after layer of parameters across GPUs without leaving processors idle or networks clogged. This week, Chinese AI lab DeepSeek unveiled two open-source tools that could rewire how developers approach this challenge: DualPipe, a bidirectional pipeline parallelism algorithm designed to eliminate GPU downtime, and EPLB, an expert-parallel load balancer for Mixture-of-Experts (MoE) models. Released during Day 4 of DeepSeek’s Open Source Week alongside compute-communication overlap analysis tools, these innovations aim to slash training times while cutting costs—and they’re free to use under MIT licenses.

Breaking the pipeline bottleneck

Traditional pipeline parallelism splits AI models into chunks assigned to separate GPUs. But this approach leaves gaps where devices sit idle waiting for others to finish their tasks—a problem known as “bubbles.” For models like DeepSeek’s own V3 or its reasoning-focused R1, cross-node communication bottlenecks compound the issue when scaling across multiple machines.
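To get a feel for how large these bubbles can be, here is a minimal sketch using the standard textbook estimate for an idealized pipeline schedule. It assumes every stage takes the same time per micro-batch and ignores communication cost entirely; the function name `bubble_fraction` is ours, not part of any DeepSeek tool.

```python
def bubble_fraction(num_stages: int, num_microbatches: int) -> float:
    """Idle share of an idealized GPipe/1F1B-style pipeline schedule.

    Assumes equal-cost stages and zero communication time, so the only
    idle time comes from filling and draining the pipeline.
    """
    if num_stages < 1 or num_microbatches < 1:
        raise ValueError("num_stages and num_microbatches must be >= 1")
    # (p - 1) idle slots per device out of (m + p - 1) total slots.
    return (num_stages - 1) / (num_microbatches + num_stages - 1)


if __name__ == "__main__":
    # More micro-batches per step shrink the bubble, at the cost of memory.
    for m in (8, 20, 64):
        print(f"8 stages, {m:>2} micro-batches: {bubble_fraction(8, m):.1%} idle")
```

Under this simplified model, the bubble never fully disappears; it only shrinks as more micro-batches are pushed through each step.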

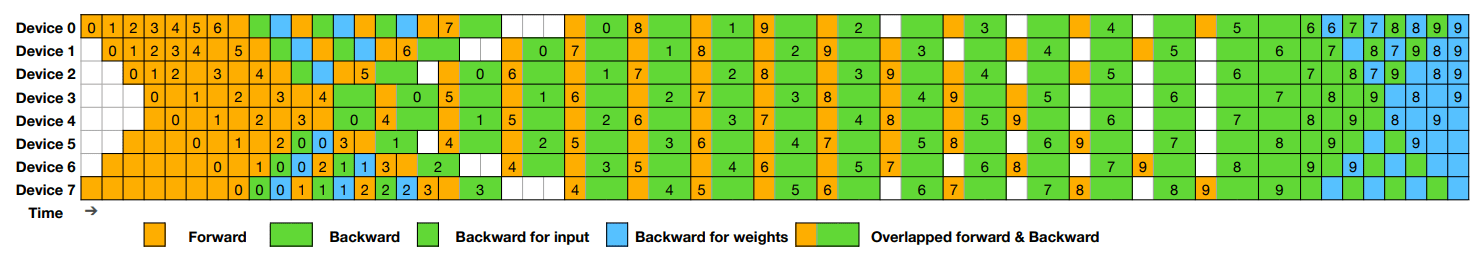

DualPipe attacks this inefficiency head-on by overlapping computation and communication phases bidirectionally. Imagine a factory conveyor belt that moves widgets forward and backward simultaneously: While one GPU crunches numbers for a forward pass, another handles backpropagation data from a previous batch. According to DeepSeek’s technical documentation, this approach reduces pipeline bubbles by up to 40% compared to standard 1F1B (one forward pass followed by one backward pass) methods.

The system’s secret sauce lies in its symmetric scheduling across eight or more GPUs—a pattern visualized in DeepSeek’s training diagrams. By mirroring micro-batches in reverse order (batch 20 processed immediately after batch 1, for instance), it keeps all devices humming with minimal waiting time between operations.
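The mirrored ordering described above can be illustrated with a few lines of Python. This is only a toy that reproduces the "first, last, second, second-to-last" pattern; it is not DeepSeek's scheduler, and `mirrored_order` is a hypothetical helper name.

```python
def mirrored_order(num_microbatches: int) -> list[int]:
    """Return micro-batch IDs (1-based) alternating from both ends.

    Example pattern for 20 micro-batches: 1, 20, 2, 19, 3, 18, ...
    """
    order: list[int] = []
    lo, hi = 1, num_microbatches
    while lo <= hi:
        order.append(lo)
        if lo != hi:  # avoid emitting the middle element twice
            order.append(hi)
        lo += 1
        hi -= 1
    return order


if __name__ == "__main__":
    print(mirrored_order(20))
```

A real bidirectional schedule also has to decide which device runs which forward or backward chunk at each time step, which this sketch deliberately leaves out.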

Taming the MoE beast

While DualPipe streamlines general model training, EPLB (Expert-Parallel Load Balancer) targets a specific architectural trend: MoE models like Mixtral that activate subsets of “expert” sub-networks per input. These systems often suffer from lopsided workloads where popular experts overwhelm their GPUs while others gather digital dust.

EPLB acts as a real-time traffic cop for these scenarios. By dynamically redistributing experts across devices based on demand—without requiring auxiliary loss functions or complex tensor parallelism—it claims to boost throughput by 15-25% in internal benchmarks. Developers can see its balancing mechanics in action through visual comparisons of GPU utilization before and after applying EPLB.
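As a rough illustration of demand-based expert placement, the sketch below uses a generic greedy heuristic: sort experts by observed load, then hand each one to whichever GPU is currently least busy. This is an assumption for illustration, not EPLB's actual algorithm or API; `balance_experts`, `gpu_loads`, and the sample load numbers are all hypothetical.

```python
import heapq


def balance_experts(expert_load: dict[str, float], num_gpus: int) -> list[list[str]]:
    """Greedy placement: heaviest expert first, onto the least-loaded GPU."""
    # Min-heap of (current total load, gpu index).
    heap = [(0.0, gpu) for gpu in range(num_gpus)]
    heapq.heapify(heap)
    placement: list[list[str]] = [[] for _ in range(num_gpus)]
    for name, load in sorted(expert_load.items(), key=lambda kv: kv[1], reverse=True):
        total, gpu = heapq.heappop(heap)
        placement[gpu].append(name)
        heapq.heappush(heap, (total + load, gpu))
    return placement


def gpu_loads(placement: list[list[str]], expert_load: dict[str, float]) -> list[float]:
    """Total load per GPU for a given placement."""
    return [sum(expert_load[name] for name in experts) for experts in placement]


if __name__ == "__main__":
    # Made-up token counts per expert: one "popular" expert and several quiet ones.
    loads = {"e0": 90, "e1": 10, "e2": 40, "e3": 35, "e4": 15, "e5": 10}
    placement = balance_experts(loads, num_gpus=2)
    print(placement, gpu_loads(placement, loads))
```

A production balancer would also have to account for the cost of moving expert weights between devices and for traffic across nodes, which a simple greedy pass ignores.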

This isn’t just theoretical optimization. During tests with biological simulation models, DeepSeek reports cutting training times from months to weeks by combining EPLB with DualPipe’s overlap capabilities. The tools also complement earlier releases like DeepGEMM for matrix math acceleration and FlashMLA for faster decoding on NVIDIA Hopper GPUs.

The efficiency arms race heats up

While benchmarks suggest impressive gains—DualPipe reportedly achieves 90% computation overlap efficiency in optimal configurations—real-world results will depend on factors like network bandwidth and model architecture. The system currently requires PyTorch 2.x and performs best on NVIDIA GPUs connected via high-speed interconnects like InfiniBand.
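Why bandwidth matters so much can be seen in a toy timing model. Here we assume that "overlap" means the fraction of communication time hidden behind computation, which is our reading rather than DeepSeek's stated definition; `step_time` and the millisecond figures are illustrative only.

```python
def step_time(compute_ms: float, comm_ms: float, overlap: float) -> float:
    """Estimated time for one training step under partial overlap.

    overlap = 0.0 means communication runs strictly after computation;
    overlap = 1.0 means the shorter phase is fully hidden by the longer one.
    """
    if not 0.0 <= overlap <= 1.0:
        raise ValueError("overlap must be between 0 and 1")
    hidden = overlap * min(compute_ms, comm_ms)
    return compute_ms + comm_ms - hidden


if __name__ == "__main__":
    for comm in (40.0, 100.0, 200.0):  # slower networks mean larger comm_ms
        print(comm, step_time(100.0, comm, 0.0), step_time(100.0, comm, 0.9))
```

In this model, once communication takes longer than computation, even perfect overlap cannot hide it, which is one reason fast interconnects matter.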

For developers racing to deploy next-gen AI agents, those efficiency gains could mean entering production months ahead of rivals, or avoiding cloud bills that bankrupt startups before their first demo day.