For years, the architecture powering ChatGPT, Claude, and other large language models has followed a well-trodden path: auto-regressive transformers that predict words sequentially, left to right. But a Silicon Valley startup’s unconventional approach—borrowing techniques from image generators like Stable Diffusion—could rewrite the rulebook for AI text generation. Inception Labs’ newly released Mercury model family leverages diffusion to generate text 10 times faster than conventional models while claiming improved accuracy and controllability.

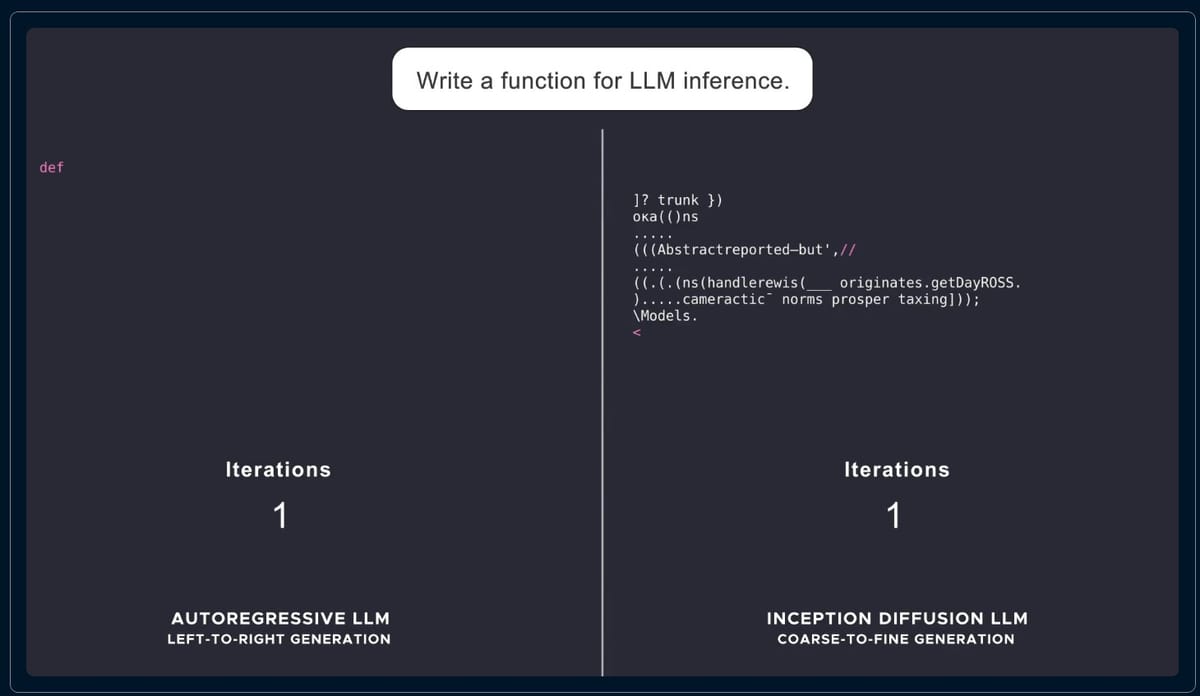

The emergence of diffusion-based LLMs marks the first credible challenge to the auto-regressive orthodoxy that’s dominated AI text generation since GPT-2. Where traditional models generate text like a court stenographer transcribing words in strict sequence, Mercury starts with “noise” and refines it into coherent responses through parallel processing—a method more akin to sculpting text from digital marble. Early benchmarks suggest this approach enables speeds surpassing 1,000 tokens per second on NVIDIA’s flagship H100 GPUs, outpacing even OpenAI’s optimized GPT-4o Mini.

Unshackling text from sequential thinking

Diffusion models made their name in visual AI by gradually adding noise to images during training, then learning to reverse the process to generate new visuals. Applying this to text requires mapping words to a mathematical “latent space” where meaning exists as multidimensional relationships rather than discrete tokens. As Inception Labs’ technical overview explains, Mercury trains on corrupted text—masked or scrambled tokens—then learns to reconstruct coherent sentences by predicting missing elements across entire sequences simultaneously.

This parallel processing capability fundamentally changes the economics of AI text generation. While traditional LLMs bottleneck at ~100 tokens/second due to sequential calculations, diffusion models spread computational load across all tokens at once. The result: Mercury Coder, a coding-specific variant, demonstrates real-time code generation that keeps pace with human typing speeds. For developers, this could mean AI pair programmers that debug code as it’s written instead of waiting for stanzaic suggestions.

Early adopters report unexpected benefits beyond raw speed. Unlike auto-regressive models that often “double down” on incorrect assumptions (the infamous reversal curse), diffusion LLMs refine outputs iteratively—a process researchers compare to breadth-first search algorithms. This allows course correction mid-generation, potentially reducing hallucinations. Inception Labs claims Mercury produces 50% fewer factual errors than comparable auto-regressive models on medical Q&A tasks, though independent verification remains pending.

Industry reactions range from cautious optimism to unbridled enthusiasm. Andrew Ng and Andrej Karpathy have both publicly praised the model’s novel approach.