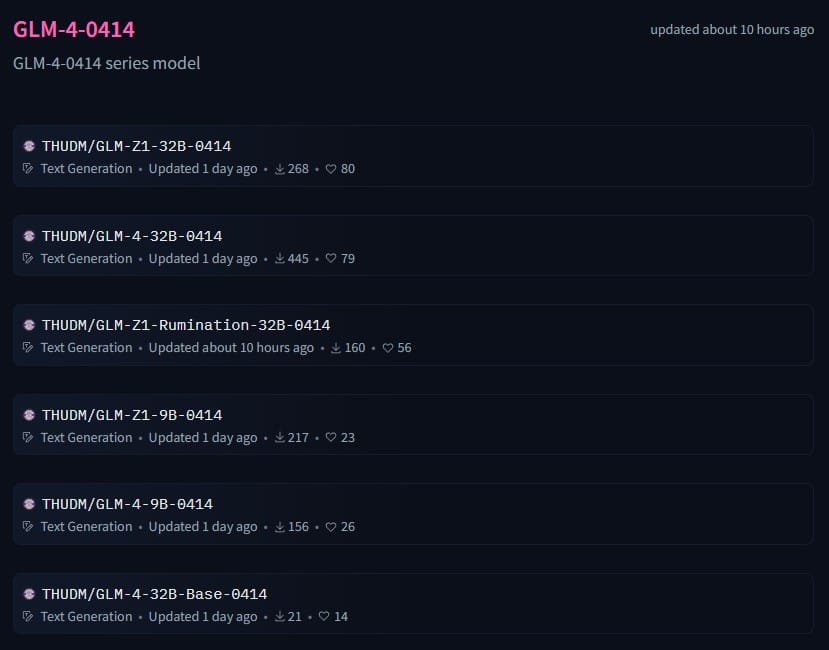

The AI model wars just got spicier with the official release of GLM-4-0414, a new series of large language models (LLMs) from THUDM/Zhipu AI that’s openly available, richly featured, and ready to challenge the open-source status quo. After a bit of a “stealth sneak peek” on GitHub and a flurry of community excitement on Reddit and Hugging Face, the GLM-4-0414 lineup is here with multiple flavors — including models with and without advanced reasoning and rumination capabilities.

The GLM-4-0414 release marks a significant milestone for Zhipu AI, a Chinese AI group that has steadily been building an ecosystem of LLMs with a focus on reasoning, code, and math skills.

These models were publicly surfaced via a GitHub pull request merged on April 14, 2025, which refactored substantial parts of the GLM-4 repo to accommodate the new models. While some demo capabilities (notably for Z1) are still pending, the core weight files and code are now openly accessible on Hugging Face, sparking eagerness among developers and researchers alike.

In the crowded landscape of open LLMs, GLM-4-0414’s combination of scale, reasoning depth, and open accessibility is notable for several reasons:

- Reasoning and Rumination: The Z1 and Z1-Rumination models demonstrate a clear emphasis on deep reasoning, iterative thought, and multi-step problem solving — capabilities often associated with proprietary giants like OpenAI’s GPT-4 or Google’s Gemini. The rumination model’s capacity to “search” and “reflect” during inference is particularly exciting for anyone looking to push open models toward more autonomous, research-style workflows.

- Reinforcement Learning for Enhanced Performance: Incorporating pairwise ranking feedback and graded responses during training reflects a growing trend in fine-tuning LLMs to better align with human preferences and complex task demands beyond mere next-token prediction.

- Resource-Aware Deployment: The 9B variant suggests Zhipu AI is mindful of real-world constraints, offering a model that balances efficiency and capability, suitable for both academic and industrial use cases with limited compute budgets.

- Open WebUI Chat Interface: Behind the scenes, the team has launched chat.z.ai, a chat platform built on Open WebUI (much like Qwen Chat) that will soon incorporate these new models. This hints at a growing ecosystem that aims to democratize access not only to LLM weights but also to user-friendly interactive deployments.
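The article does not specify Zhipu AI's exact training objective. The sketch below therefore only illustrates one common, generic way to turn pairwise ranking feedback into a training signal: a Bradley-Terry-style loss, -log sigmoid(s_preferred - s_rejected), averaged over preference pairs. The scores stand in for a reward model's outputs, the example numbers are made up, and `pairs_from_grades` is a hypothetical helper that shows one way graded responses can be expanded into such pairs. Treat it as a sketch under those assumptions, not THUDM's recipe.

```python
import math
from typing import List, Sequence, Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def pairwise_ranking_loss(pairs: Sequence[Tuple[float, float]]) -> float:
    """Mean Bradley-Terry loss over (preferred_score, rejected_score) pairs.

    Each term is -log(sigmoid(preferred - rejected)) == softplus(rejected - preferred):
    near zero when the preferred response already scores much higher, and growing
    roughly linearly when the ranking is inverted.
    """
    if not pairs:
        raise ValueError("need at least one preference pair")
    return sum(softplus(rejected - preferred) for preferred, rejected in pairs) / len(pairs)


def pairs_from_grades(scores: Sequence[float], grades: Sequence[int]) -> List[Tuple[float, float]]:
    """Hypothetical helper: expand graded responses into (higher-graded, lower-graded) score pairs."""
    pairs = []
    for i, grade_i in enumerate(grades):
        for j, grade_j in enumerate(grades):
            if grade_i > grade_j:  # equal grades express no preference, so they are skipped
                pairs.append((scores[i], scores[j]))
    return pairs


# Three responses to one prompt: model scores and human grades (made-up numbers for illustration).
scores = [2.0, 1.0, 0.5]
grades = [3, 1, 2]
pairs = pairs_from_grades(scores, grades)
print(pairs)
print(round(pairwise_ranking_loss(pairs), 4))
```

Minimizing this loss pushes higher-graded responses toward higher scores, which is the sense in which such feedback goes "beyond mere next-token prediction".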

In the following benchmark comparison, GLM-4-32B-0414 performs strongly against both open and proprietary models:

| Model | IFEval | BFCL-v3 Overall | BFCL-v3 MultiTurn | TAU-Bench Retail | TAU-Bench Airline | SimpleQA | HotpotQA |
|---|---|---|---|---|---|---|---|
| Qwen2.5-Max | 85.6 | 50.9 | 30.5 | 58.3 | 22.0 | 79.0 | 52.8 |
| GPT-4o-1120 | 81.9 | 69.6 | 41.0 | 62.8 | 46.0 | 82.8 | 63.9 |
| DeepSeek-V3-0324 | 83.4 | 66.2 | 35.8 | 60.7 | 32.4 | 82.6 | 54.6 |
| DeepSeek-R1 | 84.3 | 57.5 | 12.4 | 33.0 | 37.3 | 83.9 | 63.1 |
| GLM-4-32B-0414 | 87.6 | 69.6 | 41.5 | 68.7 | 51.2 | 88.1 | 63.8 |

Notably, GLM-4-32B-0414 posts the highest score in five of the seven columns (IFEval, BFCL-v3 MultiTurn, both TAU-Bench splits, and SimpleQA), ties GPT-4o-1120 on BFCL-v3 Overall, and trails it by just 0.1 points on HotpotQA, despite being far smaller than DeepSeek's 671B-parameter V3 and R1 models. This suggests the THUDM team has made efficient use of data quality, training techniques, and reinforcement learning to punch above its weight.
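The per-column claims can be checked mechanically. The scores below are copied from the table above; `leaders` is just an illustrative helper that returns every model sharing the top score in each column, so ties stay visible.

```python
from typing import Dict, List

COLUMNS: List[str] = [
    "IFEval", "BFCL-v3 Overall", "BFCL-v3 MultiTurn", "TAU-Bench Retail",
    "TAU-Bench Airline", "SimpleQA", "HotpotQA",
]

# Rows copied from the benchmark table, in column order.
SCORES: Dict[str, List[float]] = {
    "Qwen2.5-Max":      [85.6, 50.9, 30.5, 58.3, 22.0, 79.0, 52.8],
    "GPT-4o-1120":      [81.9, 69.6, 41.0, 62.8, 46.0, 82.8, 63.9],
    "DeepSeek-V3-0324": [83.4, 66.2, 35.8, 60.7, 32.4, 82.6, 54.6],
    "DeepSeek-R1":      [84.3, 57.5, 12.4, 33.0, 37.3, 83.9, 63.1],
    "GLM-4-32B-0414":   [87.6, 69.6, 41.5, 68.7, 51.2, 88.1, 63.8],
}


def leaders(scores: Dict[str, List[float]], columns: List[str]) -> Dict[str, List[str]]:
    """For each benchmark column, return the model(s) with the highest score (ties kept)."""
    result: Dict[str, List[str]] = {}
    for idx, column in enumerate(columns):
        best = max(row[idx] for row in scores.values())
        result[column] = [name for name, row in scores.items() if row[idx] == best]
    return result


for column, names in leaders(SCORES, COLUMNS).items():
    print(f"{column}: {', '.join(names)}")
```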

While the GLM-4-0414 series is now officially out, the ecosystem is still evolving. Some demos remain in progress, and the community can expect further refinements and benchmarks soon. Early tests shared on Reddit and other forums suggest that GLM-4-0414’s reasoning-enhanced models hold their own against established open-source competitors, with rumination particularly standing out for complex, multi-turn reasoning.

For enthusiasts and developers eager to explore beyond the usual suspects, GLM-4-0414 offers a fresh, powerful toolkit that blends Chinese AI innovation with open-source accessibility. Whether you want a heavyweight 32B reasoning beast or a nimble 9B all-rounder, this release is worth a look.
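One rough way to see the practical gap between the two sizes is to estimate how much memory the weights alone occupy at common precisions. This is a back-of-the-envelope sketch: the parameter counts are the nominal 9B and 32B from the model names (exact counts may differ somewhat), and the bytes-per-parameter table is a general rule of thumb rather than anything the release specifies.

```python
from typing import Dict

# Bytes per parameter at common precisions (general rule of thumb, not taken from the release).
BYTES_PER_PARAM: Dict[str, float] = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}

# Nominal parameter counts taken from the model names; exact counts may differ somewhat.
MODELS: Dict[str, float] = {"GLM-4-9B-0414": 9e9, "GLM-4-32B-0414": 32e9}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory (decimal GB) needed just to hold the weights.

    Ignores the KV cache, activations, and framework overhead, which all add
    to the real requirement at inference time.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in MODELS.items():
    for dtype in BYTES_PER_PARAM:
        print(f"{name} @ {dtype}: ~{weight_memory_gb(params, dtype):.1f} GB")
```

In bf16 this comes to roughly 18 GB of weights for the 9B model versus 64 GB for the 32B model, before any KV cache is allocated, which is why the smaller variant is the more realistic choice for a single consumer GPU.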

If you want to dive in, the THUDM/GLM-4 GitHub repo and the Hugging Face GLM-4-0414 collection are the places to start. Expect the chatter around GLM-4-0414 to heat up as the models get put through their paces in real-world applications and benchmarks.
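For a first local test, the standard Hugging Face Transformers loading pattern should apply. This is a sketch under several assumptions: a transformers release recent enough to include the GLM-4-0414 architecture, the `accelerate` package (required for `device_map="auto"`), and a GPU with enough memory. The repo IDs reflect the THUDM organization at release time and may have moved since, and `build_messages` and `generate_reply` are hypothetical helper names, not part of the GLM-4 repo. Check the model cards for recommended generation settings.

```python
from typing import Dict, List, Optional

# Repo IDs as they appeared in the Hugging Face GLM-4-0414 collection at release
# (assumption: repos can be renamed or moved, so verify against the collection).
MODEL_IDS: Dict[str, str] = {
    "9b": "THUDM/GLM-4-9B-0414",
    "32b": "THUDM/GLM-4-32B-0414",
}


def build_messages(prompt: str, system: Optional[str] = None) -> List[Dict[str, str]]:
    """Hypothetical helper: assemble a chat in the role/content format chat templates expect."""
    messages: List[Dict[str, str]] = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages


def generate_reply(prompt: str, size: str = "9b", max_new_tokens: int = 256) -> str:
    """Hypothetical helper: download the chosen checkpoint and generate one reply."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = MODEL_IDS[size]
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # torch_dtype="auto" uses the checkpoint's stored precision; device_map="auto" needs accelerate.
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto", device_map="auto")
    inputs = tokenizer.apply_chat_template(
        build_messages(prompt),
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Explain rumination in one paragraph.", size="9b")` would download the 9B checkpoint on first use and return the decoded reply. Deferring the transformers import keeps the message-building helper usable on machines without the full inference stack.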