OpenAI’s model lineup has a new member: GPT-4.1. After months of speculation, hints, and leaked model icons, the company officially unveiled this successor to the multimodal GPT-4o last week, and it’s more than a modest upgrade. GPT-4.1 brings a bigger context window, better coding skills, smarter instruction following, and a price tag that’s friendlier for developers. But before you get too excited about a shiny new ChatGPT interface, hold your horses: GPT-4.1 is currently an API-only affair, designed with developers and AI builders in mind, not the everyday user.

Rather than reinvent the wheel, OpenAI has taken GPT-4o and given it a serious makeover. According to The Verge and OpenAI’s announcements, GPT-4.1 is a “revamped” version of GPT-4o that outperforms its predecessor across the board, especially in coding and instruction-following tasks. In practice, that means fewer extraneous edits, better adherence to response structure, and more reliable tool usage, all qualities crucial for developers building AI-assisted coding agents.

One of GPT-4.1’s headline features is its gargantuan context window: a whopping one million tokens. To put that in perspective, that’s roughly 750,000 words, enough to swallow War and Peace whole and still have room to chat. This is a huge leap from the 128,000-token limit shared by GPT-4o and GPT-4 Turbo. OpenAI says it trained GPT-4.1 to reliably attend to information across this expanded range, improving its ability to notice relevant details and ignore distractions whether the input is a short chat or an epic multi-document prompt. That makes it particularly well suited to powering AI agents that operate over long conversations or complex workflows.
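To make the token arithmetic concrete, here is a minimal sketch that estimates whether a document fits in each window. It assumes the article's rule of thumb (one million tokens is about 750,000 words, so roughly 0.75 words per token); real tokenizers vary by language and content, and the function names are illustrative only.

```python
import math

# Rule of thumb from the article: 1,000,000 tokens ~ 750,000 words.
# This is an approximation, not an actual tokenizer.
WORDS_PER_TOKEN = 0.75

GPT_41_CONTEXT_TOKENS = 1_000_000  # figure quoted in the article
GPT_4O_CONTEXT_TOKENS = 128_000    # figure quoted in the article


def estimate_tokens(text: str) -> int:
    """Estimate a token count from a whitespace-delimited word count."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, context_tokens: int, reserved_for_output: int = 0) -> bool:
    """Return True if the estimated prompt plus reserved output tokens fit the window."""
    return estimate_tokens(text) + reserved_for_output <= context_tokens


if __name__ == "__main__":
    document = "word " * 200_000  # stand-in for a 200,000-word document
    print(estimate_tokens(document))
    print(fits_in_context(document, GPT_41_CONTEXT_TOKENS))
    print(fits_in_context(document, GPT_4O_CONTEXT_TOKENS))
```

For an exact count you would run the text through the model's real tokenizer; the estimate is only good for quick sizing.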

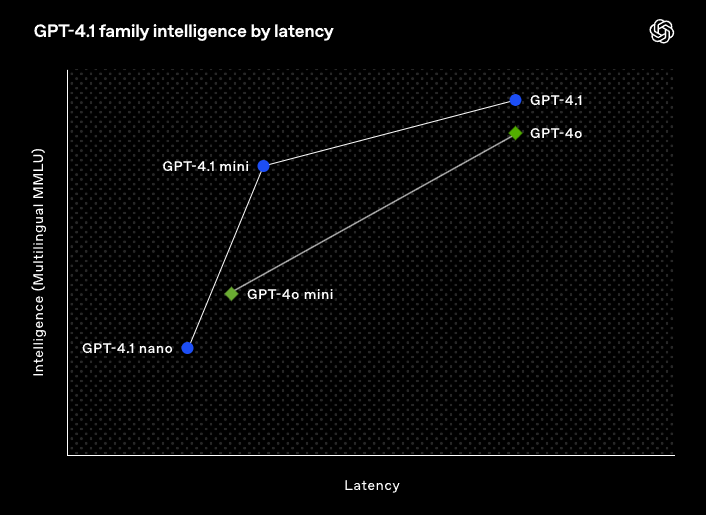

GPT-4.1 isn’t a single model but a family. Alongside the flagship GPT-4.1, OpenAI released GPT-4.1 mini and GPT-4.1 nano variants, designed to be more affordable and faster, trading off some accuracy for efficiency. The nano model is OpenAI’s “smallest, fastest, and cheapest” AI model to date, making it ideal for developers who want to experiment without breaking the bank.

GPT-4.1’s coding chops are a major focus. OpenAI says the improvements are based on direct developer feedback, with an emphasis on frontend coding, fewer unnecessary edits, and consistent formatting. On the SWE-bench Verified coding benchmark, GPT-4.1 scored between 52% and 54.6%, beating GPT-4o and GPT-4o mini but still trailing Google’s Gemini 2.5 Pro (63.8%) and Anthropic’s Claude 3.7 Sonnet (62.3%).

If you’re a ChatGPT user eagerly awaiting GPT-4.1, don’t hold your breath just yet. OpenAI has made it clear that GPT-4.1 and its mini/nano siblings are for API developers only at launch. Meanwhile, the ChatGPT experience continues to run on GPT-4o, which recently gained new image generation features so popular that OpenAI had to throttle usage to prevent its GPUs from overheating.

OpenAI’s rollout of GPT-4.1 was originally rumored to happen as early as last week, but capacity issues and high demand for other features have caused some delays. CEO Sam Altman has candidly acknowledged that GPUs are “melting” under user load, and that new releases might be delayed or cause service slowdowns as the company manages infrastructure bottlenecks.

If you’re a developer, now might be a good time to dust off your API keys and start testing how well GPT-4.1 can handle your codebase or complex workflows. For everyone else, rest assured the AI arms race is alive and well—and OpenAI’s next big step is already here, even if it’s just behind the API curtain.
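For developers who want a starting point, here is a minimal sketch of a first request using only Python's standard library. It assumes OpenAI's Chat Completions endpoint and the model IDs `gpt-4.1`, `gpt-4.1-mini`, and `gpt-4.1-nano`, with the key read from an `OPENAI_API_KEY` environment variable; the helper names `build_request` and `ask` are illustrative, and the request shape should be checked against the current API reference before you rely on it.

```python
import json
import os
import urllib.request

# Assumed endpoint for OpenAI's Chat Completions API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt, model="gpt-4.1", api_key=None):
    """Build (but do not send) a POST request carrying a single user message."""
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt, model="gpt-4.1"):
    """Send the request and return the first reply (needs network and a valid key)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Try the cheapest variant first, as the article suggests for experimentation.
    print(ask("Summarize what a context window is in one sentence.", model="gpt-4.1-nano"))
```

Switching the `model` argument among the three variants is an easy way to compare cost, speed, and quality on your own prompts.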