Compact AI with a punch: Phi-4-multimodal and Phi-4-mini bring enterprise-grade smarts to edge devices.

Microsoft has unveiled two new additions to its Phi family of small language models (SLMs)—Phi-4-multimodal and Phi-4-mini—that aim to disrupt the assumption that AI capability scales with parameter count. Clocking in at just 5.6 billion and 3.8 billion parameters respectively, these models punch far above their weight class, outperforming some competitors twice their size while being optimized for deployment on smartphones, factory sensors, and other devices where computational real estate is limited.

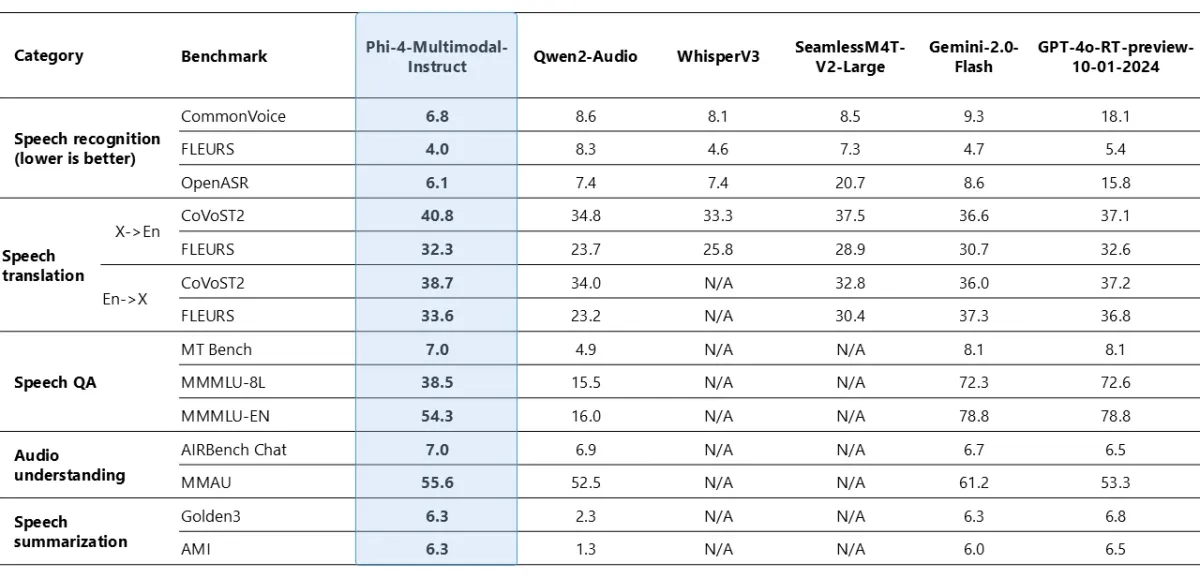

The release marks a strategic shift toward density over bulk, with Microsoft positioning these models as cost-effective alternatives to cloud-dependent giants. Phi-4-multimodal, which processes text, images, and audio through a single unified architecture, claims a 6.14% word error rate in speech recognition on the Hugging Face OpenASR leaderboard, besting even specialized systems like WhisperV3. Its compact sibling, Phi-4-mini, reportedly outperforms Meta’s 70B-parameter Llama 2 on coding benchmarks despite having roughly 95% fewer parameters.
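For context on the 6.14% figure: word error rate (WER) is the standard speech-recognition metric. It counts the word substitutions, deletions, and insertions needed to turn a model's transcript into the reference transcript, then divides by the number of reference words. The sketch below is a generic illustration of that formula, not Microsoft's or the leaderboard's evaluation code. Real benchmarks also normalize text (casing, punctuation, numbers) before scoring, which is omitted here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference,
    computed as a word-level Levenshtein (edit) distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the reference words seen so far
    # and the first j hypothesis words (a rolling single-row DP table).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra hypothesis word
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    ref = "the quick brown fox jumps over the lazy dog"
    hyp = "the quick brown fox jumped over a lazy dog"
    # Two substitutions ("jumped", "a") against nine reference words.
    print(f"WER: {word_error_rate(ref, hyp):.2%}")
```

At 6.14%, a system makes roughly six word errors per hundred reference words.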

Breaking the multimodal barrier

Where most AI systems use separate pipelines for different data types, Phi-4-multimodal employs a “mixture of LoRAs” technique to handle speech, vision, and text within a single model. This design, detailed in Microsoft’s technical report, allows simultaneous processing of multiple input types without the performance degradation that often affects multimodal systems. Early adopters report latency as low as 14ms for visual defect detection in manufacturing settings, compared to 290ms for cloud-based alternatives.
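The general LoRA idea behind this design can be shown with a toy layer. LoRA (low-rank adaptation) adds a small trainable update `B·A` on top of a frozen weight matrix. This is a simplified sketch that assumes the design works roughly as follows: a shared language-model weight stays frozen, and a separate low-rank adapter is switched in depending on the input's modality. The class names, the routing by a `modality` string, and the toy numbers are all hypothetical. The real model also relies on modality-specific encoders and projectors, which are omitted here.

```python
from dataclasses import dataclass

Vector = list[float]
Matrix = list[list[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


@dataclass
class LoRAAdapter:
    a: Matrix           # r x d_in: projects the input down to rank r
    b: Matrix           # d_out x r: projects back up to the output size
    alpha: float = 1.0  # scaling applied to the low-rank update

    def delta(self, x: Vector) -> Vector:
        scale = self.alpha / len(self.a)  # alpha / rank
        return [scale * y for y in matvec(self.b, matvec(self.a, x))]


class MixtureOfLoRALayer:
    """A frozen base projection plus one LoRA adapter per modality.

    All inputs share the base weights. Only the adapter registered for the
    input's modality is added on top; plain text uses the base weights alone.
    """

    def __init__(self, base: Matrix, adapters: dict[str, LoRAAdapter]):
        self.base = base          # frozen: not updated when adapters train
        self.adapters = adapters  # hypothetical modality -> adapter routing

    def forward(self, x: Vector, modality: str) -> Vector:
        out = matvec(self.base, x)
        adapter = self.adapters.get(modality)
        if adapter is not None:
            out = [o + d for o, d in zip(out, adapter.delta(x))]
        return out


if __name__ == "__main__":
    base = [[1.0, 0.0], [0.0, 1.0]]  # toy 2x2 identity "frozen" weight
    layer = MixtureOfLoRALayer(
        base,
        {
            "vision": LoRAAdapter(a=[[1.0, 1.0]], b=[[0.5], [0.0]]),
            "speech": LoRAAdapter(a=[[1.0, -1.0]], b=[[0.0], [2.0]]),
        },
    )
    x = [1.0, 2.0]
    for modality in ("text", "vision", "speech"):
        print(modality, layer.forward(x, modality))
```

Because each adapter is only rank `r`, adding a modality adds a small number of parameters, and the shared base weights are never modified.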

The model’s benchmarks tell a compelling story:

- 97.3% accuracy on chart/table understanding tasks when combining synthetic speech and visual inputs

- 64% score on the challenging MATH benchmark, outperforming several 8B-parameter rivals by over 20 points

- 87-language support for real-time document translation, including niche use cases like converting Japanese tax forms to Spanish reports

Developers can experiment with these capabilities through prebuilt Jupyter notebooks that demonstrate everything from audio-driven news article generation to cross-language code translation.

The efficiency play

Phi-4-mini takes a different approach, optimizing for text-based tasks where response time and energy efficiency matter. The 3.8B-parameter model supports 128K-token contexts—enough to analyze lengthy technical manuals or legal documents—while maintaining a footprint small enough for on-device processing. Microsoft claims it reduces cloud costs by 70% compared to larger models, with a 24% faster response time than its predecessor (Phi-3-mini) in coding tasks.
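A long context is expensive on-device mainly because of the key/value (KV) cache. During generation the model stores a key vector and a value vector for every token, in every layer, for every KV head. The back-of-envelope calculation below shows how that cache grows at 128K tokens. It also shows how grouped-query attention, one of the Phi-4-mini upgrades listed in this article, shrinks the cache by letting several query heads share one key/value head. The layer and head counts are assumptions for illustration (believed to match the published Phi-4-mini configuration; verify against the model card), and 16-bit cache values are assumed.

```python
def kv_cache_bytes(
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    seq_len: int,
    bytes_per_value: int = 2,  # fp16/bf16
    batch_size: int = 1,
) -> int:
    """Bytes needed to cache keys and values for one generation.

    The leading factor of 2 accounts for storing both a key and a value
    per KV head, per token, per layer.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value * batch_size


GIB = 1024 ** 3

# Assumed architecture values, for illustration only.
LAYERS = 32
QUERY_HEADS = 24
KV_HEADS = 8
HEAD_DIM = 128
CONTEXT = 128 * 1024  # "128K" tokens

if __name__ == "__main__":
    # Standard multi-head attention keeps one KV head per query head.
    full_mha = kv_cache_bytes(LAYERS, QUERY_HEADS, HEAD_DIM, CONTEXT)
    gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, CONTEXT)
    print(f"one KV head per query head: {full_mha / GIB:.0f} GiB")
    print(f"grouped-query attention:    {gqa / GIB:.0f} GiB")
    print(f"reduction: {full_mha / gqa:.0f}x")
```

Under these assumptions the saving equals the ratio of query heads to KV heads (24 / 8 = 3). A full 128K-token cache in 16-bit precision would still need about 16 GiB, so on a phone the usable context would likely be far shorter than the maximum.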

Key upgrades include:

- Function calling that lets the model interface with external APIs and databases

- Grouped-query attention to manage memory during long-context generation

- 200,000-token vocabulary for improved multilingual support
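Function calling usually works as a loop. The application describes its available tools to the model, the model replies with a structured call instead of prose, and the application validates and executes that call and feeds the result back. The sketch below shows only the application side. The JSON shape `{"name": ..., "arguments": {...}}`, the `get_inventory` tool, and the `dispatch_tool_call` helper are hypothetical; they are not Phi-4-mini's actual prompt or output format, which is documented on its model card.

```python
import json
from typing import Any, Callable


def get_inventory(sku: str) -> dict[str, Any]:
    """Hypothetical tool exposed to the model."""
    fake_db = {"A-100": 42, "B-200": 0}  # stand-in for a real database
    return {"sku": sku, "in_stock": fake_db.get(sku, 0)}


# Registry of callable tools; the model may only invoke names listed here.
TOOLS: dict[str, Callable[..., Any]] = {"get_inventory": get_inventory}


def dispatch_tool_call(model_output: str) -> str:
    """Validate a JSON tool call emitted by the model, run it, and return
    a JSON string (result or error) to feed back into the conversation."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError as exc:
        return json.dumps({"error": f"invalid JSON: {exc}"})
    if not isinstance(call, dict) or not isinstance(call.get("name"), str):
        return json.dumps({"error": "expected an object with a string 'name'"})
    arguments = call.get("arguments", {})
    if not isinstance(arguments, dict):
        return json.dumps({"error": "'arguments' must be an object"})
    tool = TOOLS.get(call["name"])
    if tool is None:
        return json.dumps({"error": f"unknown tool: {call['name']}"})
    try:
        result = tool(**arguments)
    except TypeError as exc:  # wrong or missing argument names
        return json.dumps({"error": f"bad arguments: {exc}"})
    return json.dumps({"name": call["name"], "result": result})


if __name__ == "__main__":
    simulated_model_output = '{"name": "get_inventory", "arguments": {"sku": "A-100"}}'
    print(dispatch_tool_call(simulated_model_output))
```

Returning errors as JSON, rather than raising, lets the application hand the failure back to the model so it can correct its call.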

In field tests, manufacturers using Phi-4-mini for quality control reported a 92% defect detection rate versus 78% for cloud-based alternatives, according to Azure deployment data. The model’s quantized versions run on iPhones as old as the 12 Pro, opening possibilities for AI-powered mobile apps that work offline.
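Quantization is what makes a 3.8B-parameter model plausible on a phone. It stores each weight in fewer bits and keeps a scale factor to map the values back. The article does not say which quantization scheme the mobile builds use. The sketch below therefore uses a generic symmetric per-tensor int8 scheme purely for illustration, alongside a rough weight-storage estimate. Only the 3.8B parameter count comes from the article; the bit widths and helper names are assumptions, and the estimate ignores activations, the KV cache, and per-block scale overhead.

```python
PARAMS = 3.8e9  # Phi-4-mini parameter count, as stated in the article


def weight_footprint_gb(params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (10**9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: map [-max|w|, max|w|]
    onto the integer range [-127, 127] using a single scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 values."""
    return [v * scale for v in q]


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: ~{weight_footprint_gb(PARAMS, bits):.1f} GB")

    weights = [0.12, -0.5, 0.031, 0.49]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    # Rounding error per weight is bounded by half the scale step.
    print(q, f"max reconstruction error: {worst:.4f}")
```

Going from 16-bit to 4-bit weights cuts storage by 4x (about 7.6 GB to 1.9 GB under these assumptions), at the cost of a small, bounded rounding error per weight.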

Safety first (but speed a close second)

Both models underwent rigorous security testing through Microsoft’s AI Red Team, which used open-source tools like PyRIT to probe for vulnerabilities. The company reports a fourfold reduction in hallucination risk compared to open-source alternatives, along with 92% fewer potential data breach vectors thanks to on-device processing.

Developers can further customize the models through Azure AI Foundry, which offers:

- Automated evaluations for bias and toxicity

- Tools to fine-tune models for domain-specific tasks (e.g., medical imaging analysis)

- Prebuilt templates for IoT deployments

A three-hour fine-tuning run on 16 A100 GPUs (48 GPU-hours in total) reportedly raised Phi-4-multimodal’s English-to-Indonesian speech translation score from 17.4 to 35.5, roughly doubling it and illustrating how adaptable the models are.

The edge AI arms race heats up

By making both models available through Azure, Hugging Face, and NVIDIA’s API catalog, Microsoft is clearly targeting enterprises wary of cloud dependency. The strategy mirrors Apple’s reported plans to offer multiple AI model options in future iOS updates, but with a twist: Phi-4-multimodal’s cross-modal capabilities could give it an early lead in applications like augmented reality and autonomous systems.