Circavoyant

Lighting the fuse on tech topics that haven't exploded—yet.

Latest Posts

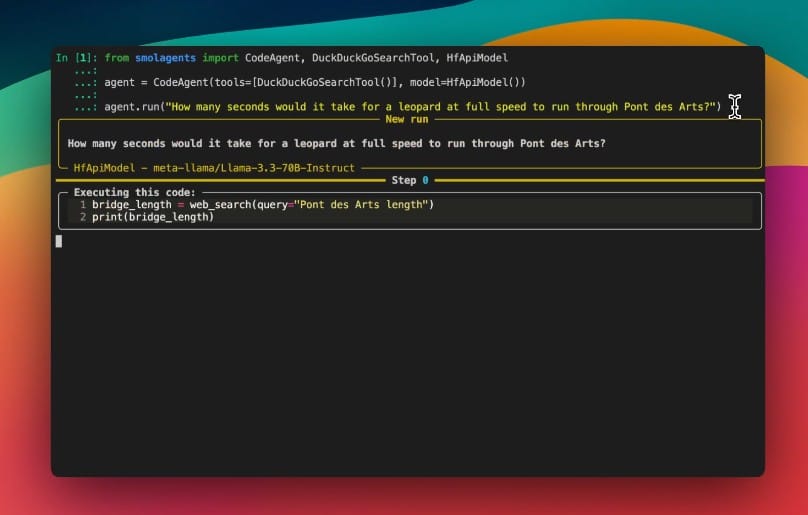

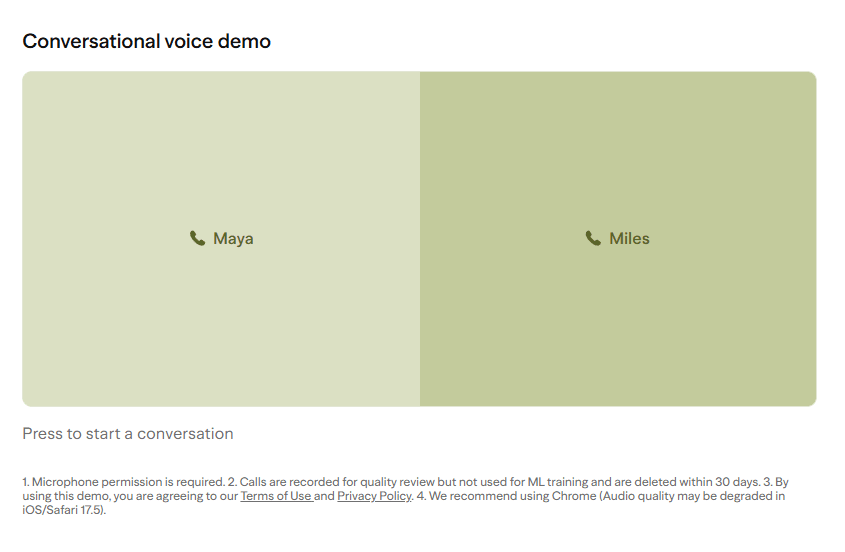

Click here to demo Sesame's Conversational AI. Preliminary testing gave me goosebumps while talking to this AI, so much so that I'd urge you to try it for yourself. I'm writing this article knowing that it's way better than

Yikes. Though, if its creative abilities are of the same 'magic' as Claude 3 Opus, perhaps it can justify its pricing and lukewarm benchmark results. At least a little bit. OpenAI’s latest large language model, GPT-4.5, has landed with promises of improved efficiency and broader knowledge—

If you’ve ever tried to extract clean, readable text from a PDF—whether it’s a scanned historical document or a modern, multi-column academic paper—you’ve likely felt the unique frustration of wrestling with jumbled paragraphs, fractured tables, and phantom line breaks. Now, an open-source tool called olmOCR

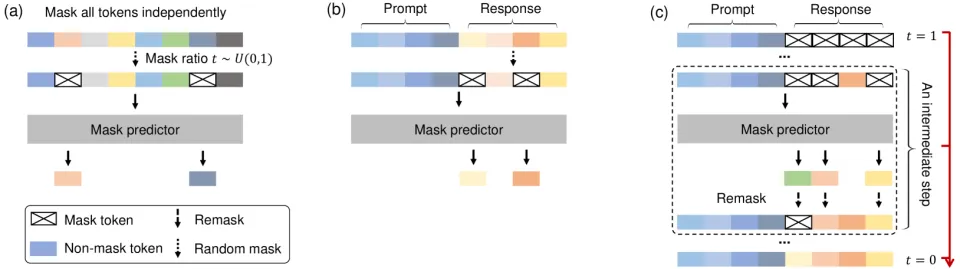

For years, the AI world has operated under one fundamental assumption: that large language models must predict text sequentially, word by word, to achieve human-like capabilities. A groundbreaking new study challenges that paradigm through an unlikely contender – a diffusion model called LLaDA that generates text through iterative refinement rather than

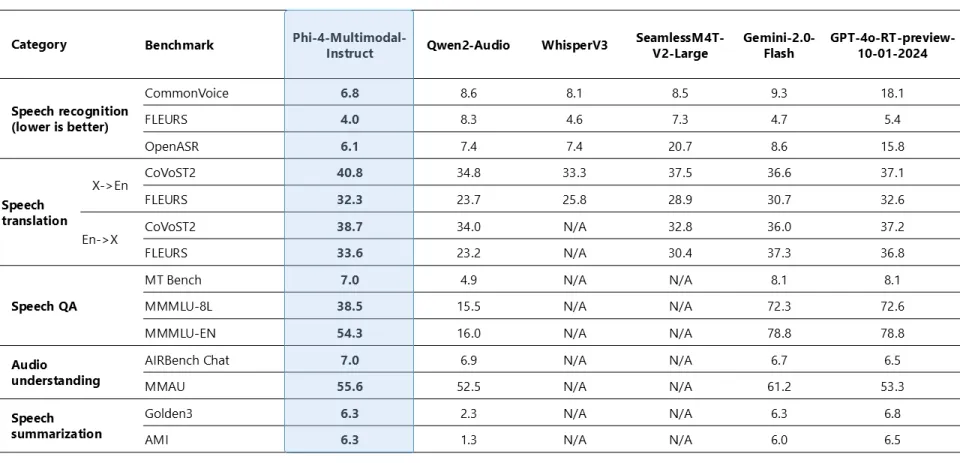

Compact AI with a punch: Phi-4-multimodal and Phi-4-mini bring enterprise-grade smarts to edge devices. Microsoft has unveiled two new additions to its Phi family of small language models (SLMs)—Phi-4-multimodal and Phi-4-mini—that aim to disrupt the assumption that AI capability scales with parameter count. Clocking in at just 5.

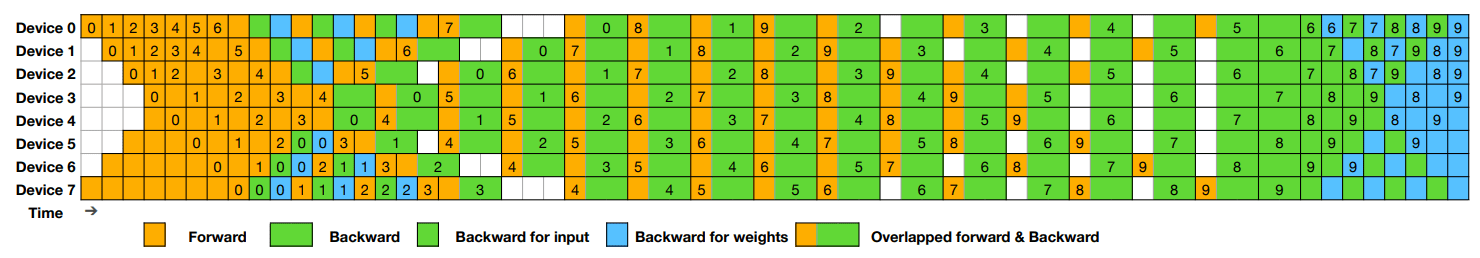

Chinese lab targets one of AI’s biggest bottlenecks with bidirectional parallelism and dynamic load balancing. Training large AI models has always been a high-stakes game of computational Tetris—fitting layer after layer of parameters across GPUs without leaving processors idle or networks clogged. This week, Chinese AI lab DeepSeek

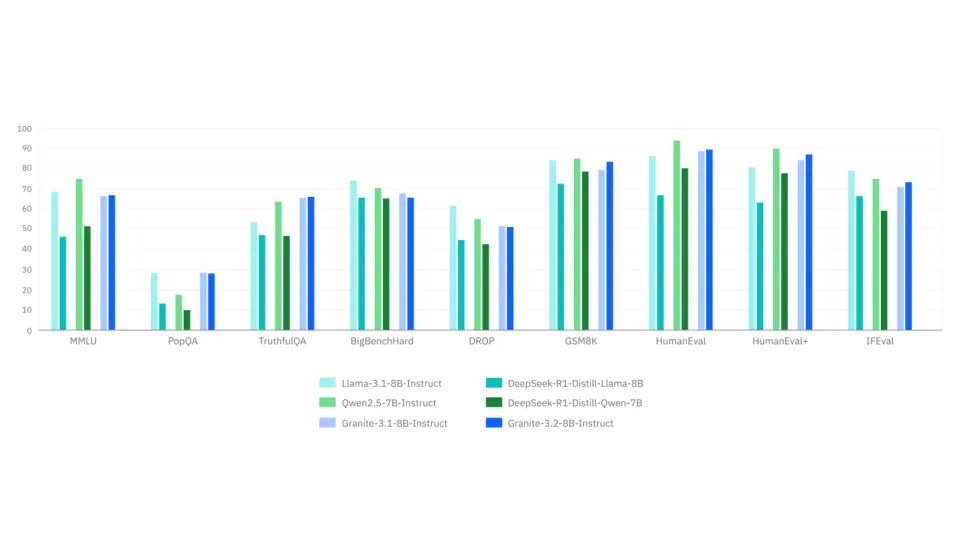

IBM has unveiled Granite 3.2, its latest open-source AI model family designed for enterprise use cases—and it’s making bold claims about performance relative to much larger systems like GPT-4o and Claude 3.5 Sonnet. The release introduces reasoning enhancements, multimodal document analysis tools, slimmer safety models, and

One month after releasing its cost-efficient DeepSeek-R1 language model, Chinese AI developer DeepSeek took an unexpected turn: open-sourcing three critical components of its machine learning infrastructure over consecutive days. The latest release targets one of AI’s most fundamental operations, matrix multiplication, with a CUDA-powered library claiming record-breaking performance on

The move could balance server demand while courting global developers. Could it trigger a pricing war in an already cutthroat AI market? DeepSeek, a rising Chinese AI startup challenging Western giants like OpenAI and Meta, the maker of Llama, has unveiled an aggressive discount program offering up to 75% off API access