Circavoyant

Lighting the fuse on tech topics that haven't exploded—yet.

Latest Posts

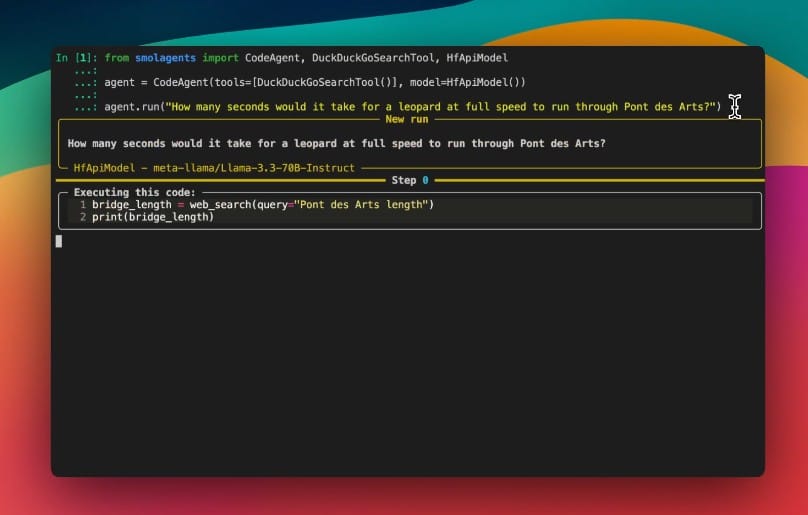

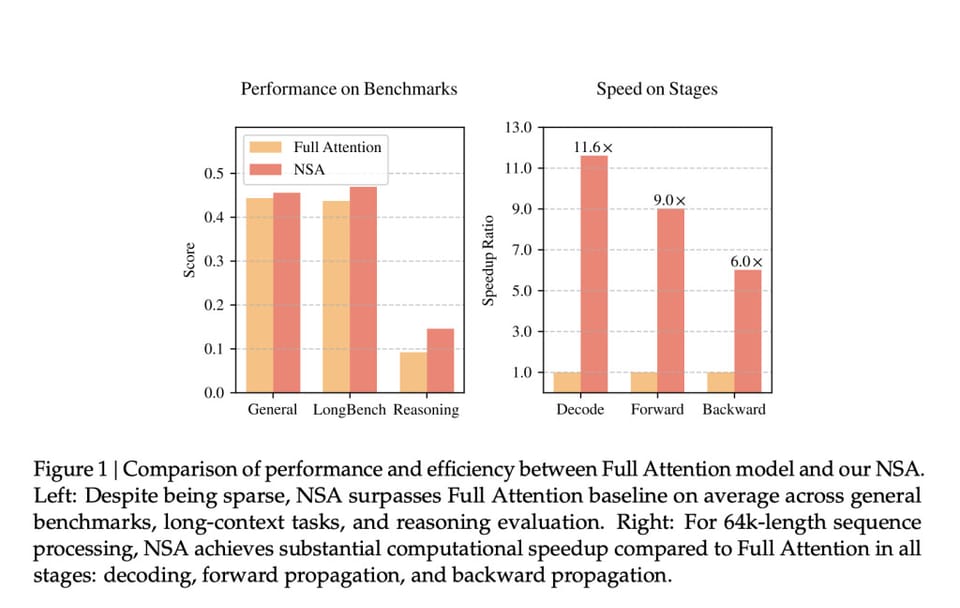

As large language models push into territory once reserved for human cognition—analyzing entire code repositories, summarizing novels, or maintaining coherent conversations spanning hours—the computational demands of traditional attention mechanisms have become unsustainable. Chinese AI lab DeepSeek’s newly proposed Native Sparse Attention (NSA) tackles this challenge through a

Elon Musk’s xAI has unveiled Grok 3, a new large language model positioned as a competitor to OpenAI’s GPT-4o, Google’s Gemini, and China’s DeepSeek. The company claims the model achieves “superhuman reasoning” through a combination of architectural upgrades and synthetic training data, though independent researchers urge

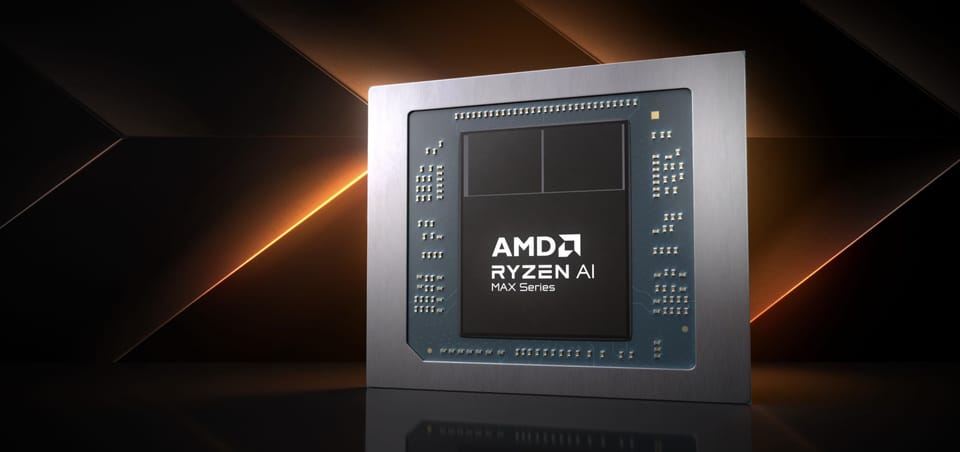

We compiled a comprehensive, benchmark‐focused deep dive that weaves together every test—from CPU and GPU synthetic scores to real‐world gaming, content creation, AI workloads, and battery life. The data is consolidated from multiple sources (Dave2D, Hardware Canucks, Just Josh, NotebookCheck, and The Phawx) with notes on power

AMD’s latest Ryzen AI Max 395+ APU, codenamed "Strix Halo," isn’t just another chip; it’s a statement that integrated graphics may finally rival discrete foes. Paired with Asus’s redesigned ROG Flow Z13 hybrid gaming tablet, this silicon challenges

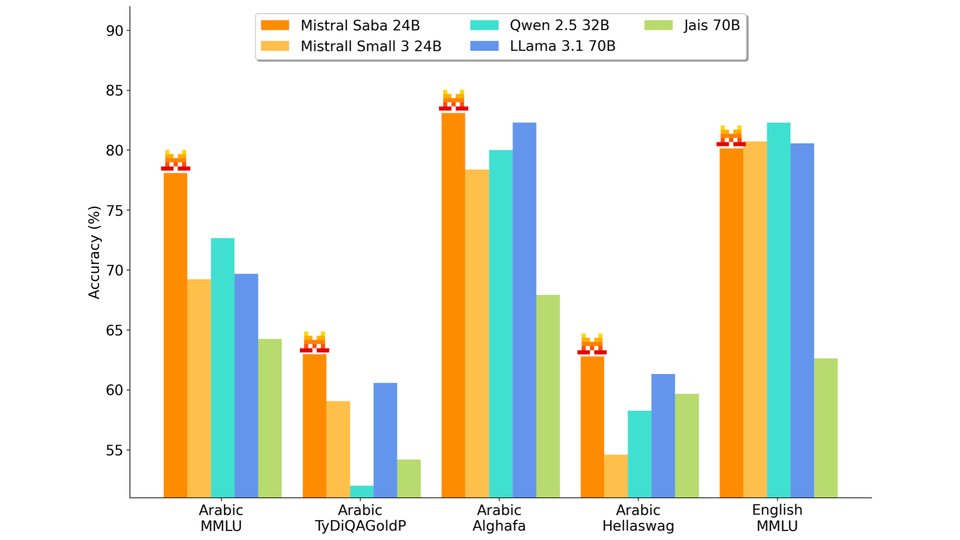

This article was originally written in English. As an experiment and for your own assessment, we have included translations provided by Mistral Saba.

A new method translates programming logic into natural language to boost problem-solving flexibility. Large language models have become adept at narrow tasks like solving math problems or writing code snippets. But when it comes to flexible, cross-domain reasoning—connecting logical dots between scientific concepts or untangling multi-step real-world puzzles—their

Large language models can draft emails, summarize meetings, and even tell a decent joke. But ask one to untangle a thorny supply chain problem or debug a complex algorithm, and it might flounder—or worse, confidently spit out a plausible-sounding fiction. A new study reveals how two key upgrades—structured

A new tool from Microsoft aims to give AI models better “eyes” for navigating the messy world of graphical interfaces—without peeking under the hood. Released this week on Hugging Face and GitHub, OmniParser V2 converts screenshots of apps or websites into structured data that AI agents can parse. The

Edited Feb 16, 2025. Hugging Face-led collaboration proves smaller models can punch above their weight with specialized training. A coalition of AI researchers has pulled back the curtain on OpenR1-Qwen-7B, an open-weights language model that replicates the mathematical prowess of China’s cutting-edge DeepSeek-R1 through collaborative engineering. The project demonstrates