The world of large language models (LLMs) has been exploding in recent years, with innovations ranging from Meta’s LLaMA models to Huggingface’s smolagents and lightweight runtimes like llama.cpp. While OpenAI and other providers dominate with cloud-based APIs, a vibrant ecosystem has emerged around running capable models locally — on your own hardware, often without any cost beyond electricity and a bit of elbow grease. If you’ve been curious about how to set up a fully local AI agent system that's both powerful and privacy-respecting, then this deep dive into smolagents, llama-cpp-python, and Ollama will get you started.

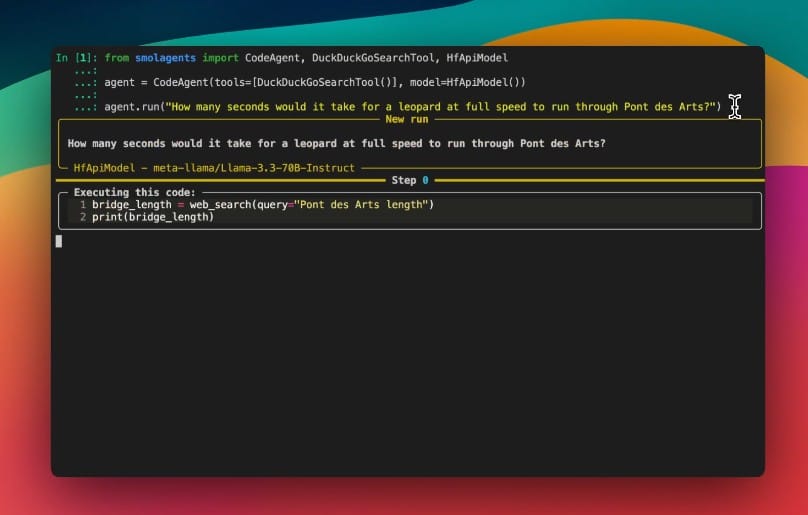

At the intersection of simplicity and power lies smolagents, a minimalist yet potent Python library developed by Huggingface that lets you build agentic AI systems with surprisingly little code. Unlike many agent frameworks that treat tool invocations as JSON blobs or text commands, smolagents’ signature feature is the CodeAgent, which writes its actions as executable Python code snippets. This approach reportedly reduces the number of LLM calls by about 30% and boosts performance on challenging benchmarks — a neat trick that also opens doors for sandboxed and secure execution environments via Docker or E2B.

But smolagents themselves don’t do the heavy lifting of running the LLMs. Instead, they are model-agnostic and can integrate with a broad range of backends — including remote APIs from OpenAI, Anthropic, or Huggingface, as well as local models. This is where Ollama and Llama 3.2B step in. The Llama 3.2B model is a quantized version of the larger LLaMA family, preserving much of the performance at a fraction of the size and compute cost. This makes it an ideal candidate for local experimentation with AI agents.

The installation flow is straightforward for Windows users:

-

Download and install Ollama from the official site.

-

Verify Ollama installation by running

ollamain the command prompt. -

Pull the Llama 3.2B model:

ollama pull llama3.2:3b. -

Create a Python virtual environment, activate it, and install smolagents and accelerate:

pip install smolagents accelerate -

Write a simple Python script to test the integration:

from smolagents import CodeAgent, LiteLLMModel model = LiteLLMModel(model_id="ollama_chat/llama3.2:3b", api_key="ollama") agent = CodeAgent(tools=[], model=model, add_base_tools=True, additional_authorized_imports=['numpy', 'sys', 'wikipedia', 'scipy', 'requests', 'bs4']) agent.run("Solve the quadratic equation 2*x + 3x^2 = 0?")

The agent will generate Python code to solve the equation, execute it, and return the answer. This demonstrates the seamless interplay of smolagents’ Python-native agent logic with a local LLM backend powered by Ollama and Llama 3.2B.

While Ollama provides a polished local runtime and model distribution, the underlying technology that powers many local LLM deployments is llama.cpp, an open-source C/C++ inference engine originally developed by Georgi Gerganov. llama-cpp-python is the Python binding to llama.cpp, offering both low-level access to the native API and a high-level, OpenAI API–compatible interface. This combo allows you to run LLaMA models and many derivatives on a wide range of hardware — from your laptop CPU to Apple Silicon GPUs (via Metal) and even NVIDIA GPUs (via CUDA). It supports multiple quantization levels (1.5-bit to 8-bit), hybrid CPU+GPU inference, and even multi-modal models that handle images alongside text.

Installing llama-cpp-python typically involves building from source, which ensures maximum performance tailored to your system. You can enable various acceleration backends such as OpenBLAS for CPUs, CUDA for NVIDIA GPUs, Metal for Macs, and Vulkan or SYCL for other GPUs by setting environment variables during installation. For example:

CMAKE_ARGS="-DGGML_CUDA=on" pip install llama-cpp-python

Pre-built wheels are also available for certain configurations, including CUDA and Metal support. Example of a basic text completion with llama-cpp-python:

from llama_cpp import Llama

llm = Llama(model_path="./models/7B/llama-model.gguf")

output = llm("Q: Name the planets in the solar system? A:", max_tokens=32, stop=["Q:", "\n"], echo=True)

print(output)

The llama.cpp project itself is a sprawling playground not just for inference but also for tooling, model conversion, and integration. It supports a vast array of models — from LLaMA 1 through 3, Mistral, Falcon, and many experimental architectures — and offers diverse bindings in languages ranging from Python and Rust to Swift and JavaScript. For those wanting to run local LLMs at scale or integrate them into applications, llama.cpp and its Python bindings represent the state of the art for open-source, hardware-optimized inference.

With the combination of Huggingface’s smolagents library, the Ollama runtime, and the Llama 3.2B model, developers now have a straightforward path to running capable AI agents locally without sinking costs into cloud APIs or sacrificing privacy. Meanwhile, the broader llama.cpp ecosystem, accessible through llama-cpp-python, unlocks deep customization, hardware acceleration, and multi-modal capabilities for enthusiasts and researchers alike.